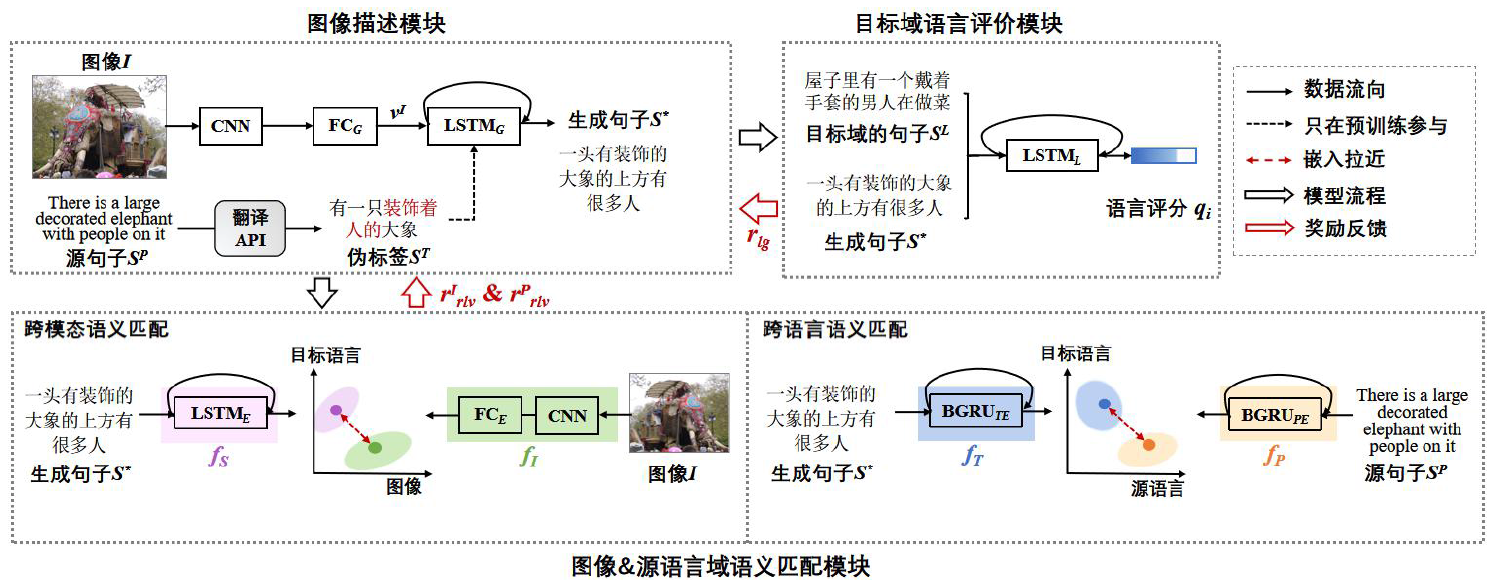

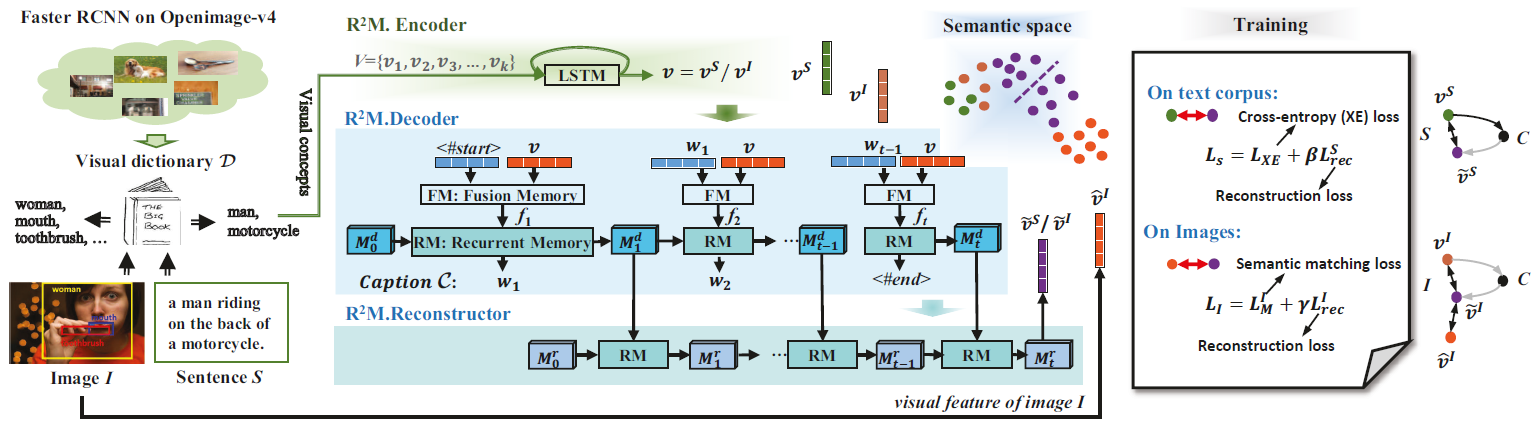

Peipei Song, Dan Guo*, Jun Cheng, and Meng Wang*

IEEE Transactions on Multimedia, 2022

[Paper] [BibTex]

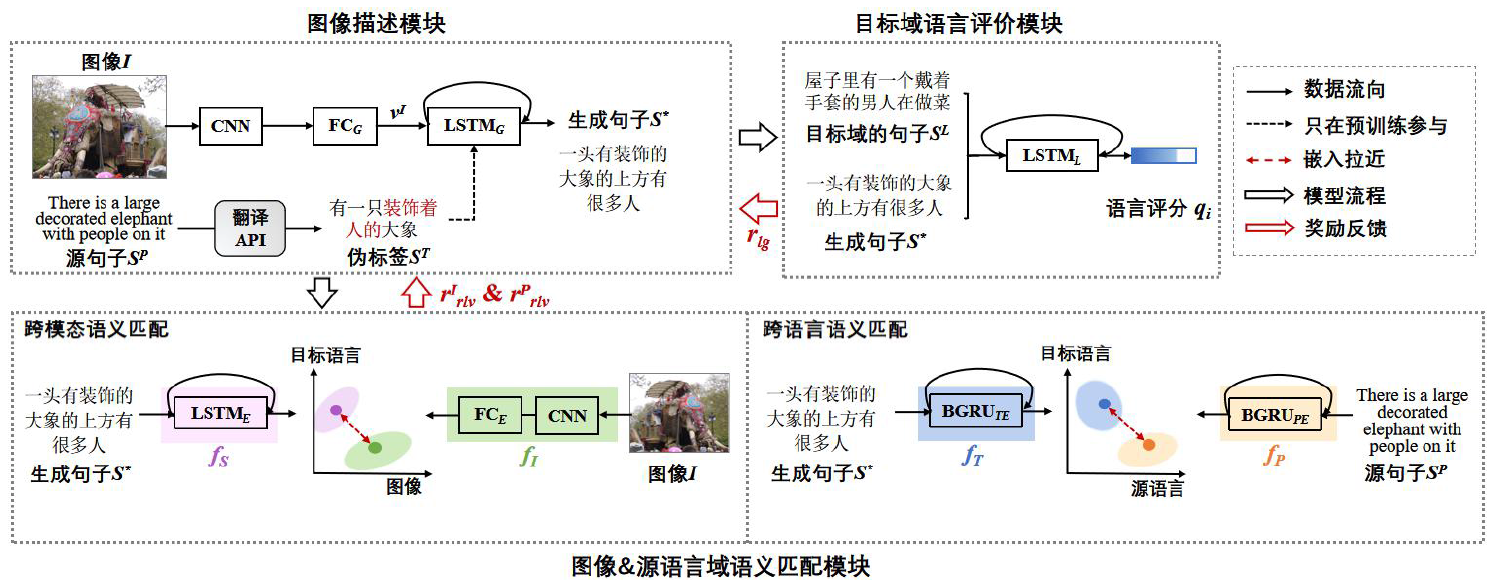

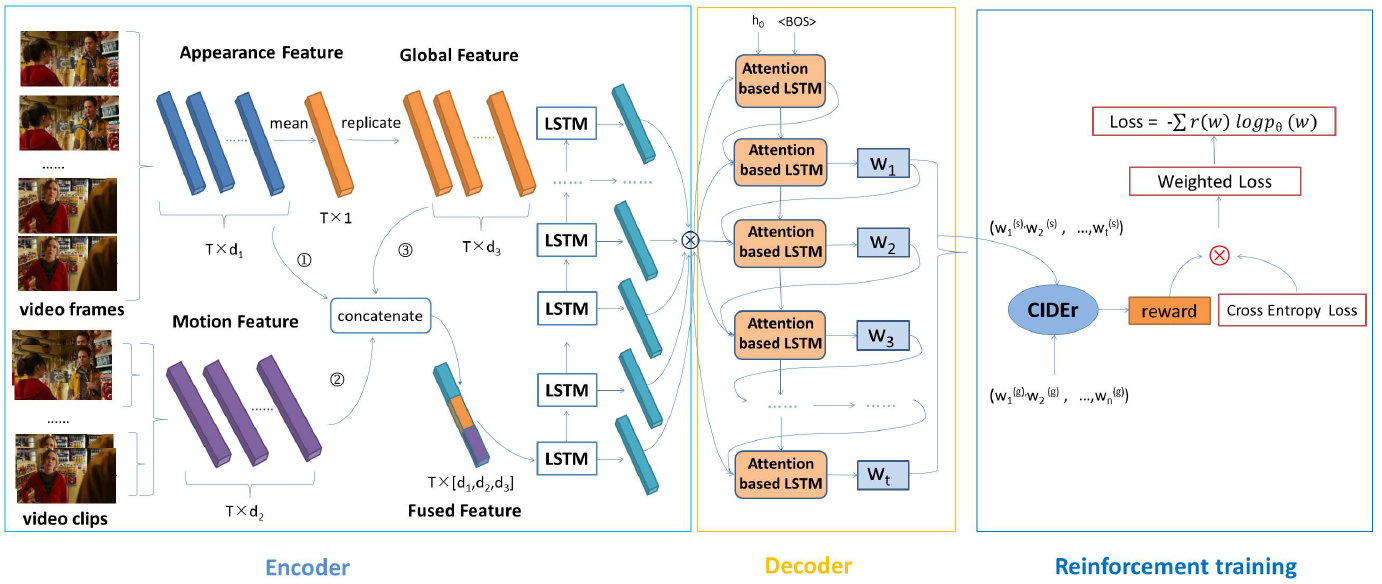

Peipei Song, Dan Guo*, Jinxing Zhou, Mingliang Xu, and Meng Wang*

IEEE Transactions on Cybernetics, 2022

[Paper] [BibTex]

Jing Zhang, Dan Guo*, Peipei Song*, Kun Li, and Meng Wang

Journal of Image and Graphics (中国图象图形学报), 2021

[Paper] [BibTex]

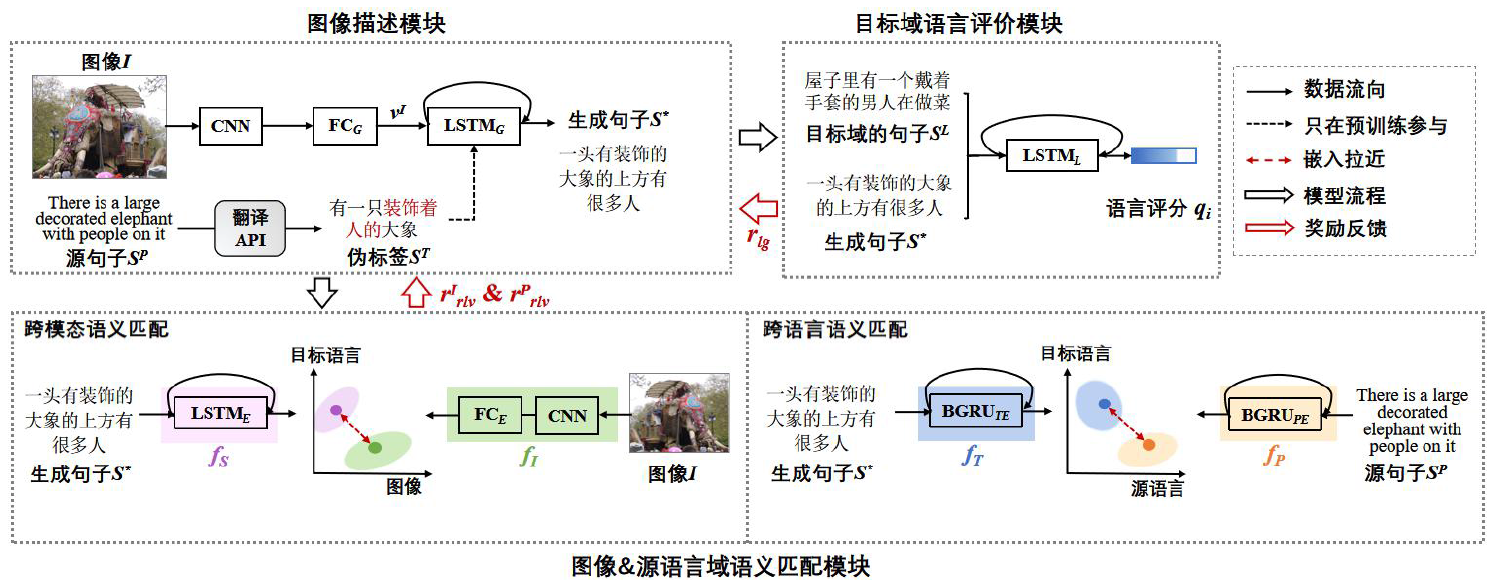

Dan Guo, Yang Wang*, Peipei Song*, and Meng Wang

International Joint Conference on Artificial Intelligence (IJCAI), 2020

[Paper] [BibTex]

Yuling Gui, Dan Guo, and Ye Zhao

Workshop on Multimedia for Accessible Human Computer Interfaces (MAHCI), 2019

[Paper] [BibTex]